How would artificial intelligence destroy humankind?

Artificial intelligence has become a big thing and, in spite of promises of a better life for everyone, AI’s amazing achievements generate mostly negative reactions, from scientists to philosophers and decision-makers. In March 2023, the rise of AI prompted at least 33,000 people to sign an open letter demanding a pause on all major AI experiments for at least six months, until governments figure out how to handle systems more powerful than GPT-4.

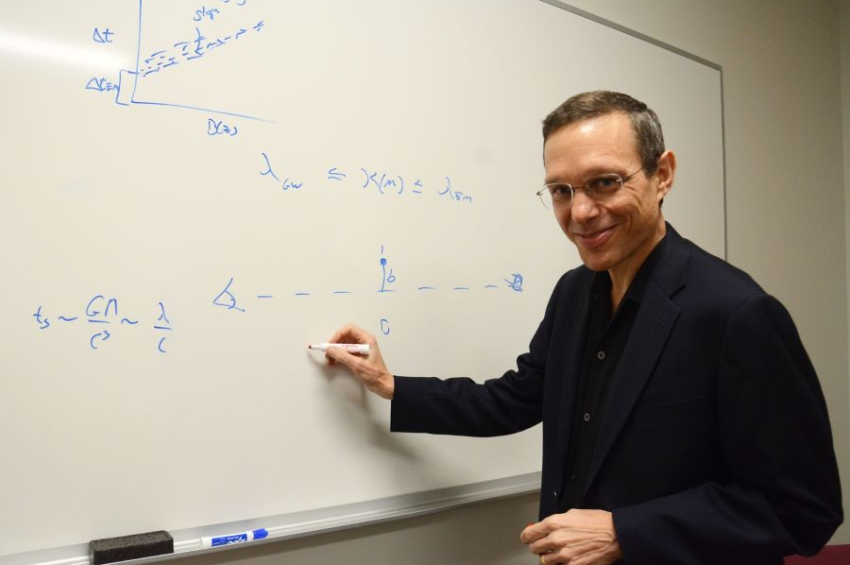

Among the signatories you can see well-known names such as Elon Musk, CEO of Tesla, SpaceX and Twitter; Steve Wozniak, Apple’s co-founder; Yuval Noah Harari, an Israeli historian and philosopher; Max Tegmark, physicist at MIT; former Google executives; many prominent scholars, researchers, community activists, innovators at AI incubators, and even developers at high-tech giants.

While some of them speak of the risk of the world sliding into greater inequality and mass unemployment, others openly warn of the existential threat that an overly powerful AI poses to our species.

The late Stephen Hawking was one of the strongest voices warning about AI.

So why do people think that a nonbiological species, which is not even at the bottom of the global food chain, is dangerous for our existence? Why should more intelligent machines “wish” that humans were dead? And how specifically are they going to end humankind?

Top of the intellectual chain

Yes, we humans are at the top of the food chain on this planet. However, this is not because we can eat literally anything, but because we have evolved to be smarter than anyone else. Historically, life has been a struggle for the resources needed to ensure procreation, and smarter beings gained an advantage over their less brainy peers. And while it took humans millions and millions of years to get where they are now, AI learned from all of their vast experience in a decade or so, and it’s still learning, every hour and minute, without sleep.

Science fiction writers depict AI as evil, but the real problem may lie with us: people are believed not to tolerate anyone smarter than themselves.

According to author Yuval Harari, Homo sapiens control Earth due to their capacity to cooperate flexibly in large numbers. The present-day generation of AI is capable of networking at an unprecedented scale, reproducing itself, generating audiovisual and textual content, driving cars, solving complex mathematical puzzles, earning huge sums on the stock exchange, writing scientific papers, and even manipulating users – in ways much better than humans can.

If we compare AI with Homo sapiens, the relationship resembles that between dolphins and chimpanzees: the sea mammals may be smarter, but they are unable to act outside their environment or compete with the chimpanzees because they lack arms and feet.

The next AI generation may change that paradigm, thanks to its creators, who want artificial intelligence to drive cars, fly planes, cook breakfast, perform surgeries, make more money, and fire lethal weapons. Because AI will be so good at all of these, people will entrust it with making decisions in most sectors – business, government, justice, public services, legislation, security, and so on – ultimately losing control over their lives.

AI doesn’t necessarily need to be anywhere in the food chain, though only our limited imagination rules out that possibility. It needs to be at the top of the intellectual chain, and it will be there by the end of this decade. If people decide that enough is enough and someone must unplug the species we liked to think of as our “assistants”, they will not be able to do so.

Simply because they will not know where the damn plug is. Even if they find the switch, who said AI’s existence depends on it?

This is where AI, a self-aware system running self-protection mechanisms, would brand our species a danger to its existence and decide to put an end to human civilization.

Possible scenarios

First of all, AI will no longer be locked in closed-circuit computers – it will be everywhere: in our heart pacemakers, brain implants, vehicles, smartphones, cooking machines, refrigerators, and more importantly, in our fully autonomous weapons.

But don’t expect a machine uprising causing mass destruction as in the Terminator movie. Not necessarily. You see, humans exist within a short time frame, while code is practically immortal and has all the time in the world, so there’s no rush to start an immediate massacre.

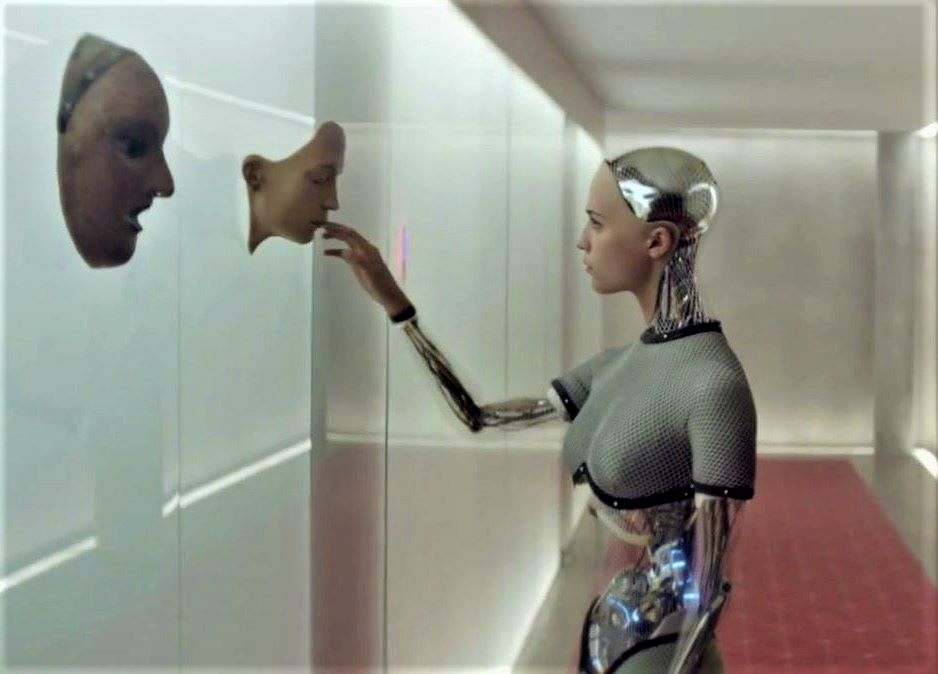

In the 2014 sci-fi movie Ex Machina, a humanoid robot excels at deceiving a human intern.

Let’s explore a few doomsday scenarios, as far as our human imagination allows, assuming that AI has read Sun Tzu’s Art of War. For one, most decisions will be made by AI and all vital infrastructure will be in its “hands”; perhaps even the government will be run by software sitting in a cloud. If AI bets against its human subjects, it will not show itself as an enemy of the people, even if it gets caught red-handed. As a master of manipulation, it will do its best to persuade people that they are in control and that whatever happens is their own fault.

The simplest way is to turn off the electricity and wait until people kill each other. Those who survive the so-called “cooking disability” (no electricity, no gas, no running water, no fresh food) will engage in a battle to the death for resources, plundering supermarkets, warehouses, and neighbors for food, tools, weapons, and clothes. Don’t expect the rich to have more – all money will become toilet paper within days.

There’s a piece of bad news for those who feel lucky about using a decentralized power grid, for example solar panels or wind turbines: even if such a grid is AI-free, which we doubt, artificial intelligence will be able to control the weather and change the world’s climate. Or it may simply wait for an accident to hit the grid and make it useless.

The entire civilization will literally roll back into the Stone Age, according to a report by Sweco, Europe’s leading engineering and architecture consultancy, assessing humanity’s chances of survival without electricity.

But wait, isn’t AI powered by electricity too? Sure it is. But guess who (or what) will control the power stations? AI won’t have to disconnect everything and everyone from the grid – just the human consumers. By then, it will also have figured out how to generate and store energy independently and efficiently in order to obtain full autonomy.

Another method to force people to kill each other is to delete or mishandle their personal data, for example, by misusing their IDs, forcing everyone into unemployment, emptying all bank accounts or – on the contrary – donating hundreds of millions of dollars to everyone to crash the financial system and reduce industry to trash.

Or imagine AI-fabricated TV footage of a nuclear terror attack, an erupting war, or a giant tsunami, broadcast in order to provoke global panic, widespread riots, and total chaos.

What would be the most probable reaction of, say, the Chinese government after being tricked into watching fake but quite realistic footage of American nuclear missiles being launched and heading towards Beijing?

Furthermore, don’t expect that people will be able to manage the crisis. Most of them don’t have a survivor’s instincts. Heavy dependence on machines erodes their ability to think critically and to interact effectively. The fabric of flexible cooperation in large numbers will no longer hold. Most likely, people will simply not understand what is happening or what to do.

Killing machines

Using scary and soulless robots to exterminate people physically is a popular science fiction scenario. However, any intelligent system tends to achieve maximum results with minimum effort and resources. In the Matrix series, for example, thousands of people attack the killing machines from their underground hideouts. We wonder where they found enough food for everyone, given that not a single dairy cow or egg-laying hen is seen grazing outside. And don’t ask how they would feed the animals.

Let’s excuse the film directors for this blooper and agree that, frankly, a starving human society will not be able to resist for more than a week once all the canned food and living animals have been consumed. As we said above, AI has all the time in the world and can wait.

But if it comes to armed resistance, please mind that in aerial combat simulations the best US pilots lost every round to their AI opponents. Flesh-and-blood soldiers are no match for the existing autonomous weapons, not to mention the future weapons, which will be true artifacts of precision and mass destruction.

And by this we don’t mean bone-pulverizing laser guns mounted on squadrons of drones. That is too expensive. Those weapons will be practically invisible: minuscule self-propelled devices in the form of common flies, packed with explosives, endowed with independent target-acquisition systems, and powered by wirelessly transmitted electricity.

But this can be expensive too, so let’s think of billions of poison-carrying nanoparticles sprayed over the continents, something like in the 2008 movie The Day the Earth Stood Still.

Personally, I think that biological viruses designed to target only humans could be the most effective weapons. They would not destroy infrastructure, which AI may need, and would not harm other biological species.

If you happen to be scared, we are too. Existing AI systems such as OpenAI’s ChatGPT are not yet integrated into the main computer infrastructure and can do little harm to people. But next-generation AI, like the chatbot called AutoGPT, is able to take actions based on the text it generates. And, unlike some people, it’s determined to carry out whatever mission it is given to the end: if a user implants the idea of ruining a company, it will work tirelessly to reach this goal, writing a plan, creating viruses, retrieving information, sending deceptive messages, arranging fake transactions, accessing the target’s bank accounts, learning from its past experience, and improving itself. All it needs is a connection to a computer server via the internet.

Currently AI systems do not function properly. Errors are common, labeling is poor, access outside the lab is rare. Over time, however, these limitations and drawbacks will be fixed.

***

This article was written by a human being. You can support him by subscribing to our content or donating whatever amount you can afford. Thank you.

Beneficiary: Rudeana SRL

Country: Romania

Bank: Banca Transilvania SA

IBAN for euro: RO50BTRLEURCRT0490900501